Until now, we have usually used Google Code to store at most three kinds of files: JavaScript, ZIP archives, and images, and nothing else. The reason is that when any other type of file stored on Google Code is opened, such as a CSS or HTML file, it does not work. It simply shows up as plain text and cannot be used the way a CSS or HTML file normally would be.

We usually upload files directly through the Google Code website, or through a Subversion client such as TortoiseSVN. The good news is that by configuring MIME types for a range of file types in that client, we can upload files in many different formats. The Google Code directory then no longer serves only as storage for code; we can also use it to store all kinds of files, much like ordinary file-hosting services.

I am not yet confident enough to explain exactly what MIME means. If you want to learn more, you can read about it on Wikipedia - MIME.

By defining automatic properties in the client, we can upload files that have nothing to do with code at all, such as PDF, DOC, SWF (Flash), WAV, and others.
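A MIME type is a label such as `text/css` that tells a browser how to treat a file, and tools usually derive it from the file extension. As a small illustration, not part of the original setup, Python's standard `mimetypes` module performs the same kind of extension-to-type lookup that the `svn:mime-type` settings later in this article spell out by hand. The file names are hypothetical examples:

```python
import mimetypes

# A MimeTypes instance created without extra files answers from Python's
# built-in table only, so the result does not depend on the OS MIME database.
guesser = mimetypes.MimeTypes()

for name in ["style.css", "index.html", "manual.pdf", "report.doc"]:
    mime_type, _encoding = guesser.guess_type(name)
    print(f"{name:12} -> {mime_type}")
```

In a Subversion repository, the `svn:mime-type` property records the type explicitly, so the server does not have to guess it from the name.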

To widen the range of file types Google Code accepts, we need to do two things: first, install TortoiseSVN, and second, create a new project page.

Task I: Installing TortoiseSVN

Visit this site ⇒ http://tortoisesvn.net/downloads.html. Download the application and install it. After installation, restart the computer, because the application integrates itself into the context (right-click) menu as a shell extension.

Then right-click on the desktop and choose TortoiseSVN » Settings:

TortoiseSVN » Settings

A dialog box like this will appear. Click General, then click the Edit button next to Subversion configuration file, and add the following settings to the file that opens:

[miscellany]

enable-auto-props = yes

[auto-props]

# Scriptish formats

*.bat = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.bsh = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/x-beanshell

*.cgi = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.cmd = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.js = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/javascript

*.php = svn:eol-style=native; svn:keywords=Id Rev Date; svn:mime-type=text/x-php

*.pl = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/x-perl; svn:executable

*.pm = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/x-perl

*.py = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/x-python; svn:executable

*.sh = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/x-sh; svn:executable

# Image formats

*.bmp = svn:mime-type=image/bmp

*.gif = svn:mime-type=image/gif

*.ico = svn:mime-type=image/x-icon

*.jpeg = svn:mime-type=image/jpeg

*.jpg = svn:mime-type=image/jpeg

*.png = svn:mime-type=image/png

*.tif = svn:mime-type=image/tiff

*.tiff = svn:mime-type=image/tiff

# Data formats

*.pdf = svn:mime-type=application/pdf

*.avi = svn:mime-type=video/avi

*.doc = svn:mime-type=application/msword

*.eps = svn:mime-type=application/postscript

*.gz = svn:mime-type=application/gzip

*.mov = svn:mime-type=video/quicktime

*.mp3 = svn:mime-type=audio/mpeg

*.ppt = svn:mime-type=application/vnd.ms-powerpoint

*.ps = svn:mime-type=application/postscript

*.psd = svn:mime-type=application/photoshop

*.rtf = svn:mime-type=text/rtf

*.swf = svn:mime-type=application/x-shockwave-flash

*.tgz = svn:mime-type=application/gzip

*.wav = svn:mime-type=audio/wav

*.xls = svn:mime-type=application/vnd.ms-excel

*.zip = svn:mime-type=application/zip

# Text formats

.htaccess = svn:mime-type=text/plain

*.css = svn:mime-type=text/css

*.dtd = svn:mime-type=text/xml

*.html = svn:mime-type=text/html

*.ini = svn:mime-type=text/plain

*.sql = svn:mime-type=text/x-sql

*.txt = svn:mime-type=text/plain

*.xhtml = svn:mime-type=application/xhtml+xml

*.xml = svn:mime-type=text/xml

*.xsd = svn:mime-type=text/xml

*.xsl = svn:mime-type=text/xml

*.xslt = svn:mime-type=text/xml

*.xul = svn:mime-type=text/xul

*.yml = svn:mime-type=text/plain

CHANGES = svn:mime-type=text/plain

COPYING = svn:mime-type=text/plain

INSTALL = svn:mime-type=text/plain

Makefile* = svn:mime-type=text/plain

README = svn:mime-type=text/plain

TODO = svn:mime-type=text/plain

# Code formats

*.c = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.cpp = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.h = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.java = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.as = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

*.mxml = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/plain

# Webfonts

*.eot = svn:mime-type=application/vnd.ms-fontobject

*.woff = svn:mime-type=application/x-font-woff

*.ttf = svn:mime-type=application/x-font-truetype

*.svg = svn:mime-type=image/svg+xml

Save your changes. Leave it at that for now.

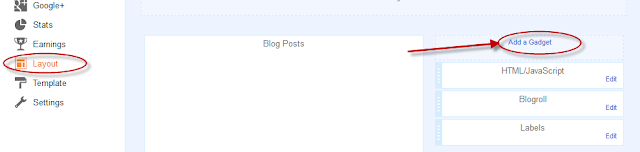
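To make the `[auto-props]` format above more concrete, here is a minimal Python sketch, not Subversion's own code, of how such a table can be read: each line maps a file-name pattern to a `;`-separated list of properties, and a file receives the properties of every pattern it matches. The names `parse_auto_props`, `props_for`, and `SAMPLE_CONFIG` are hypothetical, and the sketch does not reproduce every detail of Subversion's own pattern matching (it compares names case-sensitively, for instance):

```python
from fnmatch import fnmatchcase


def parse_auto_props(text):
    """Return the [auto-props] entries of a Subversion config as
    a list of (pattern, {property: value}) pairs, in file order."""
    rules = []
    in_auto_props = False
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            # Only lines inside the [auto-props] section are rules.
            in_auto_props = line == "[auto-props]"
            continue
        if not in_auto_props or "=" not in line:
            continue
        # The first "=" separates the file pattern from its property list.
        pattern, _, prop_list = line.partition("=")
        props = {}
        for item in prop_list.split(";"):
            item = item.strip()
            if not item:
                continue
            name, _, value = item.partition("=")
            # A bare name such as svn:executable gets an empty value here.
            props[name.strip()] = value.strip()
        rules.append((pattern.strip(), props))
    return rules


def props_for(filename, rules):
    """Merge the properties of every rule whose pattern matches filename."""
    merged = {}
    for pattern, props in rules:
        if fnmatchcase(filename, pattern):
            merged.update(props)
    return merged


SAMPLE_CONFIG = """
[miscellany]
enable-auto-props = yes

[auto-props]
# Text formats
*.css = svn:mime-type=text/css
*.html = svn:mime-type=text/html
*.sh = svn:eol-style=native; svn:keywords=Id; svn:mime-type=text/x-sh; svn:executable
"""

rules = parse_auto_props(SAMPLE_CONFIG)
print(props_for("style.css", rules))  # only the *.css rule applies
print(props_for("deploy.sh", rules))  # four properties from the *.sh rule
print(props_for("notes.txt", rules))  # no rule matches
```

Subversion applies auto-props when files are added or imported, so files committed before this setting was enabled keep whatever properties they already had.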

Task II: Creating a New Project Page

If you don't have a Google account yet, create one first so you can access Google Code. Visit http://code.google.com, then click Create A New Project:

Creating a new project.

Fill in the form, set the version control system option to Subversion, then choose the license you want for the files uploaded there:

Filling in the form.

Click Create Project. If it succeeds, you will be taken to your new project's dashboard. Click the Source tab, then click the googlecode.com password link to generate a password. This password is used to connect the application to your Google Code project:

Generating the Google Code password.

Write down the password that is shown:

The password (and email address) have been generated.

Start Uploading

Open TortoiseSVN by right-clicking on the desktop and choosing TortoiseSVN » Repo Browser:

Repo browser.

A dialog box will appear for entering the project URL:

Entering the project URL.

The URL always follows this pattern (replace nama_proyek with your project's name):

https://nama_proyek.googlecode.com/svn/trunk/

The thing to remember, and the most common reason uploads fail: when entering the project URL in the application, use https, but when you want to view or access your work online, use http.

Click OK and wait until loading finishes.
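The https/http rule is easy to get wrong, so it can help to generate both forms from the project name. This is a minimal Python sketch: `my-project` is a hypothetical project name standing in for `nama_proyek`, and the function names are made up for illustration. It only builds strings from the URL patterns given in this article (including the source-browser pattern near the end) and does not contact any server:

```python
from urllib.parse import quote


def repo_urls(project):
    """Return (commit_url, view_url) for a project's trunk, following
    the article's rule: https for TortoiseSVN, http for viewing online."""
    if not project:
        raise ValueError("project name must not be empty")
    commit_url = f"https://{project}.googlecode.com/svn/trunk/"
    view_url = f"http://{project}.googlecode.com/svn/trunk/"
    return commit_url, view_url


def file_url(project, path):
    """Public (http) URL of one uploaded file; "/" in path is kept."""
    _, view_url = repo_urls(project)
    return view_url + quote(path.lstrip("/"))


def browse_url(project):
    """Source-browser page where files can be edited online."""
    if not project:
        raise ValueError("project name must not be empty")
    return f"http://code.google.com/p/{project}/source/browse/trunk/"


commit, view = repo_urls("my-project")
print(commit)  # the form to paste into the Repo Browser URL box
print(view)
print(file_url("my-project", "css/style.css"))
print(browse_url("my-project"))
```

Percent-encoding the path with `quote` keeps file names containing spaces usable in a browser.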

Open the folder containing the files you want to upload, and drag them into the file list area:

Uploading files.

After a moment, you will be asked to authenticate your Google Code account, like this:

Authenticating the Google Code account.

Enter the email address and the password you wrote down earlier. Tick Save authentication so you don't have to log in again every time you upload files in the future.

Besides letting us upload unusual file types, a Subversion client also lets us edit files we have already uploaded. We no longer have to delete the old file and upload a new one over and over, as we would when using Google Code through its website.

To check your uploaded files one by one online, use this URL pattern:

http://nama_proyek.googlecode.com/svn/trunk/

We can also edit (but only edit) files directly on the Google Code website, if online editing is permitted in the project settings. Visit this URL pattern:

http://code.google.com/p/nama_proyek/source/browse/trunk/

Thanks to Taufik Nurrohman for the great article:

Hosting File dengan Google Code dan TortoiseSVN